Thursday, May 26, 2011

Blog Update

CRM 2011 Email Router Load Data Error

I've been over and over the steps outlined in the CRM 2011 Email Router Installation Guide and cannot identify why the email router configuration fails at "Users, Queues and Forward Mailboxes" > "Load Data".

Error: Cannot retrieve user and queue information from CRM Server. May indicate ... server is busy. Verify URL is correct.... this problem can occur if specified login credentials are insufficient...

We have a CRM 2011 deployment that is accessible and working through the browser at http://crm2k11.OrganizationName.com. It authenticates against a Windows Server 2003 Active Directory.

We have also installed the CRM 2011 Email Router on the same machine. The problem is that when we try to load the user and queue data, we get the attached error.

Can you please let us know what we can check to get this working?

Because of these issues we opened a support incident, and here is what was done to resolve them.

1) We reviewed the trace information that was captured and checked both your user account and an administrator account.

2) We attempted to browse to the Organization.svc page in Internet Explorer and saw that it was looking for the physical path D:\Program Files\Microsoft Dynamics CRM\CRMWeb\XRMServices\2011\Organization.svc.

3) We discussed that you had previously installed CRM on the D:\ drive of this machine, ran into issues with that installation, uninstalled it, and then reinstalled on C:\.

4) We then created a new organization and were able to access both the organization and its Organization.svc page.

5) We then attempted to remove and re-import the original organization, but that did not work.

6) We searched the registry for references to the organization service and found several under HKEY_CURRENT_USER\Software\Microsoft\Windows\CurrentVersion\Explorer\RunMRU that pointed to D:\Program Files\Microsoft Dynamics CRM instead of C:\Program Files\Microsoft Dynamics CRM. We corrected these references but still could not access the Organization.svc page.

7) We then removed the organization and changed its name. With the new organization name we were able to access the Organization.svc page.

8) When attempting to load data, we then saw the following message:

"The decryption key could not be obtained because HTTPS protocol is enforced but not enabled. Enable HTTPS protocol and try again."

9) We got past that message with the steps below. If SSL is not in use, a new registry value has to be added:

1. Click Start, click Run, type regedit, and then click OK.

2. Locate and then click the following registry key:

HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\MSCRM

3. On the Edit menu, click New, and then click DWORD Value.

4. Name the new value DisableSecureDecryptionKey.

5. Right-click DisableSecureDecryptionKey, and then click Modify.

6. In the Value data box, type 1, and then click OK.

7. On the File menu, click Exit.

10) We then got a message saying that the user needed to be a member of PrivUserGroup.

11) We added your user to PrivUserGroup, and the load data operation then completed.

12) We then discussed the setup of the forward mailbox that you use in your current production environment.

13) We also discussed setting up IFD for CRM 2011. The setup document is linked below. Any questions about it would need to be raised in a new case.

http://www.microsoft.com/downloads/en/details.aspx?FamilyID=9886ab96-3571-420f-83ad-246899482fb4

14) We discussed that you plan to switch over from your production environment to this one in the near future.
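If you would rather script the registry change from step 9 than click through regedit, the sketch below builds the equivalent `reg add` command for the key and value named above. It assumes Python is installed on the CRM server and is run from an elevated prompt. Use a 64-bit interpreter: a 32-bit one would launch the 32-bit reg.exe, which writes to the redirected Wow6432Node view instead of the 64-bit view used by CRM 2011. `build_reg_add_args` and `apply_setting` are hypothetical helper names.

```python
import subprocess
import sys
from typing import List

# Key and value taken from the support steps above
MSCRM_KEY = r"HKLM\SOFTWARE\Microsoft\MSCRM"
VALUE_NAME = "DisableSecureDecryptionKey"


def build_reg_add_args(value: int = 1) -> List[str]:
    """Return the reg.exe arguments that create (or overwrite) the DWORD value."""
    return [
        "reg", "add", MSCRM_KEY,
        "/v", VALUE_NAME,
        "/t", "REG_DWORD",
        "/d", str(value),
        "/f",  # overwrite without prompting if the value already exists
    ]


def apply_setting() -> None:
    """Run reg.exe; only meaningful on the Windows CRM server itself."""
    if sys.platform != "win32":
        raise OSError("reg.exe is only available on Windows")
    subprocess.run(build_reg_add_args(), check=True)


if __name__ == "__main__":
    # Print the command so it can be reviewed (or pasted into cmd) before running it
    print(" ".join(build_reg_add_args()))
```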

Make sure you check all of the items below for the scenario we discussed.

1) Make sure that you import the database instead of just restoring it over the top of the organization. There are several reasons for this, the biggest being the organization name change we put into place.

2) When you switch the website binding to use the host header, make sure it is changed in the following locations:

Deployment Manager: right-click Microsoft Dynamics CRM, select Properties, and open the Web Address tab.

IIS

E-mail Router: this must be done after the Deployment Manager change.

If you run into issues with the organization while migrating production or changing the bindings, you will need to open a new case, as that is outside the scope of this case, which was to get the Router Configuration Manager to load user and queue data.

One last thing: make sure that you have approved the user's email in the settings.

As we are running CRM 2011 as dogfood, I found an interesting new feature: when adding a new user, you have to approve their email address for it to work properly. Otherwise, in Outlook for instance, you will get an error saying that the sending user does not have an email address.

So if you get this error, add an email address to the user record and click the "Approve Email" button in the ribbon.

Finally, there was a dash (-) in our organization name. We removed it, and now everything is working perfectly.

What's New in SharePoint Server 2010

It’s a presentation from Microsoft. I am not sure whether it is right to publish it, but I couldn’t find it published anywhere else.

Enable Tracing in CRM 2011

Register the cmdlets

- Log into the administrator account on your Microsoft Dynamics CRM server.

- In a Windows PowerShell window, type the following command:

Add-PSSnapin Microsoft.Crm.PowerShell

This command adds the Microsoft Dynamics CRM Windows PowerShell snap-in to the current session. The snap-in is registered during installation and setup of the Microsoft Dynamics CRM server.

Get a list of the current settings. Type the following command:

Get-CrmSetting TraceSettings

The output will resemble the following:

CallStack : True
Categories : *:Error
Directory : c:\crmdrop\logs
Enabled : False
FileSize : 10
ExtensionData : System.Runtime.Serialization.ExtensionDataObject

Set the Trace Settings

- Type the following command:

$setting = Get-CrmSetting TraceSettings

- Type the following command to enable tracing:

$setting.Enabled="True"

- Type the following command to set the trace settings:

Set-CrmSetting $setting

- Type the following command to get a current list of the trace settings:

Get-CrmSetting TraceSettings

The output will resemble the following:

CallStack : True
Categories : *:Error
Directory : c:\crmdrop\logs
Enabled : True
FileSize : 10
ExtensionData : System.Runtime.Serialization.ExtensionDataObject

Make sure you have created the trace directory first. The CRM server will not create it for you; instead, Deployment Manager will throw an internal exception.

Wednesday, May 25, 2011

CRM 2011 CrmService.asmx 401 Unauthorized

We recently upgraded from CRM 4.0 to 2011, and I found a link stating that I don’t have to rewrite a whole chunk of code; instead, I can use the 2007 endpoint URL for backward compatibility.

http://msdn.microsoft.com/en-us/library/gg334316.aspx

So I was more than happy. However, the code was not working as required and threw a 401 error when creating leads and cases, so I had to do the following:

1) I had to remove the existing web reference and replace it with:

http://<servername[:port]>/mscrmservices/2007/crmservice.asmx?WSDL&uniquename=organizationName

Details here:

http://msdn.microsoft.com/en-us/library/cc151015.aspx

2) Then I had to add this code segment:

// Token that tells the 2007 endpoint which organization to use
CrmAuthenticationToken token = new CrmAuthenticationToken();
token.OrganizationName = "OrganizationName";

_crmService = new CrmService();
_crmService.CrmAuthenticationTokenValue = token;
_crmService.PreAuthenticate = true;

// Either run under the current Windows identity...
// _crmService.Credentials = System.Net.CredentialCache.DefaultCredentials;

// ...or pass explicit domain credentials (login, password, domain)
_crmService.Credentials = new System.Net.NetworkCredential("testdomainlogin", "testdomainpassword", "EA");

Source: http://social.microsoft.com/Forums/en/crmdevelopment/thread/fd97215a-454f-4875-b7c9-9b2fbefa0f10

And eureka, it’s working! It took me 3 hours.
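The web reference URL in step 1 follows a fixed pattern, so if you have several servers or organizations it can help to generate it rather than type it. This is a small sketch. `crm2007_wsdl_url` is a hypothetical helper, and it only reproduces the URL shape shown above (plain HTTP, optional port, organization unique name in the `uniquename` query parameter).

```python
from typing import Optional
from urllib.parse import quote


def crm2007_wsdl_url(server: str, org_unique_name: str, port: Optional[int] = None) -> str:
    """Build the CRM 4.0-compatible (2007) endpoint WSDL URL for one organization."""
    host = f"{server}:{port}" if port else server
    # uniquename identifies the organization whose WSDL should be generated
    return (f"http://{host}/mscrmservices/2007/crmservice.asmx"
            f"?WSDL&uniquename={quote(org_unique_name)}")


print(crm2007_wsdl_url("crm2k11", "OrganizationName", port=5555))
```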

Thursday, April 28, 2011

CRM 2011 – Sample data

CRM 2011 makes importing the sample data quick and easy. Importing it isn’t difficult, but it is frustrating when you are not sure where to look.

Log in with administrator rights and go to:

Settings

System/Data Management

Sample Data

You will be asked whether you want to install it; click the Install Sample Data button.

Now you can look around CRM: the dashboards spring into life, and your organization has enough data for customer demos or development practice.

The feature provides the following sample data:

| Entity | Records |
|---|---|
| Accounts | 14 |
| Campaigns | 8 |
| Cases | 14 |
| Contacts | 14 |
| Leads | 10 |
| Opportunities | 15 |
| Phone Calls | 10 |
| Subjects | 2 |
| Tasks | 14 |
| Goals | 4 |

Sources

http://www.avanadeblog.com/xrm/2010/09/crm-2011-feature-of-the-week-9202010-sample-data.html

and

http://crmbusiness.wordpress.com/2011/02/03/crm-2011-how-to-quickly-add-sample-data/

Monday, April 25, 2011

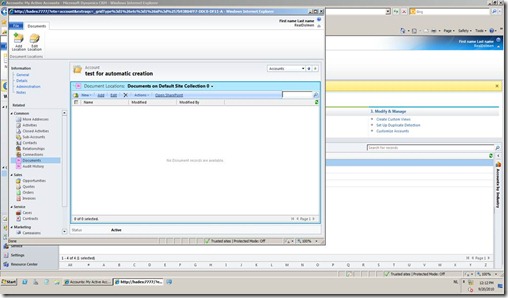

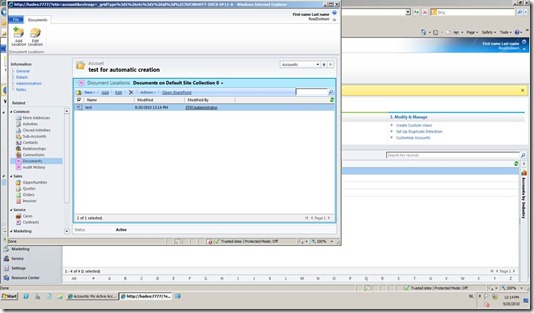

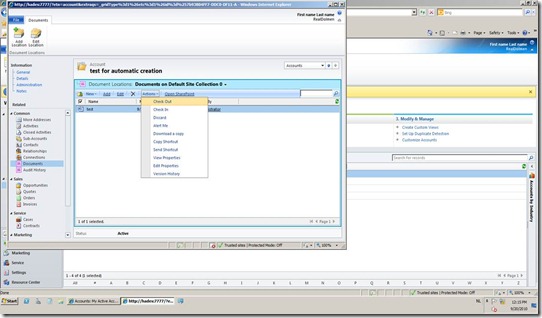

SharePoint 2010 and CRM 2011 Out-of-the-Box Integration

CRM can be integrated with SharePoint easily using a new tool called the CRM List Component. Once that is installed, CRM can be configured from the Settings area to enable entities for SharePoint collaboration. You also need to specify the URL of the site configured for integration (where the component was installed). After that the integration is complete: when documents are added in CRM, a new library is created in SharePoint. Existing libraries can also be associated with entities. Each entity with documents has a library association whose address follows the format “siteaddress/Accounts/Account Name/Entity Name/account/”.

That is pretty much it for this integration. It is neat, but a few minor glitches remain, so it is better to demo it on a machine where both products are installed. I have done this part for a demo. Another type of integration, where CRM data is shown on the SharePoint home page, requires some additional coding and development.

http://raotayyabali.wordpress.com/2010/11/09/crm-2011-integration-with-sharepoint-foundation-2010/

Wednesday, March 16, 2011

Database development considerations

Many of us, intentionally or unintentionally, do not plan the application back end for a high-availability website or application. This creates frustration at both ends: it exhausts developers, who are unable to build a robust application, and by the same token it frustrates the client, who is not getting optimum performance from the application. The purpose of the following notes is to create a checklist that should be complied with before delivering any product for quality assurance. I have color-coded the points: red means critical, blue means important, and green means supportive.

1. Plan the database for its purpose. If it is for online transaction processing (OLTP), it should be normalized and should not violate normalization rules, no matter how much breaking them might boost processing. Please consider the following notes for OLTP databases.

i. Create multiple filegroups for different sets of tables, for example one filegroup for indexes, one for lookup items, and one for transactions. Multiple filegroups help optimize disk IO.

ii. Make sure that your transactions are precise and short enough to avoid deadlocks. Develop them so that a single stored procedure handles a complete set of inserts or updates.

iii. Schedule database backups for minimum-usage hours. Although SQL Server keeps processing transactions while a backup runs, client responses become slower during the backup.

iv. Use table partitioning if there is a large amount of data, because a huge table has a worse impact on inserts and updates.

v. When developing the OLTP part of any application, use the minimum number of indexes; every extra index has a negative impact on write performance.

vi. Use an optimum hardware configuration to handle the large numbers of concurrent users and quick response times required by an OLTP system.

vii. Review the max degree of parallelism setting. By default it is 0, which lets SQL Server use all available processors for parallel plans, so parallelism is already enabled. Because parallel queries usually reduce OLTP throughput (see xix), consider limiting it on OLTP servers.

viii. Increase the 'min memory per query' option to improve the performance of queries that use hashing or sorting operations if your SQL Server has a lot of memory available and many queries run concurrently on the server.

ix. You can increase the 'max async IO' option if SQL Server runs on a high-performance server with a high-speed intelligent disk subsystem.

x. Table indexes become slow as they are used and fragment, so run DBCC DBREINDEX with a proper fill factor regularly to optimize performance.

xi. If you use the full recovery model and mirroring is configured, it is better to increase the recovery interval. This has a positive impact on database performance.

xii. If the database must handle a large number of concurrent requests, try increasing 'max worker threads'; you can also set 'priority boost' to 1. Only raise the priority if the server is dedicated to SQL Server; otherwise the higher SQL Server priority will hurt other applications on the server.

xiii. Too many table joins for frequent queries. Overuse of joins in an OLTP application results in longer running queries & wasted system resources. Generally, frequent operations requiring 5 or more table joins should be avoided by redesigning the database.

xiv. Too many indexes on frequently updated (inclusive of inserts, updates and deletes) tables incur extra index maintenance overhead. Generally, OLTP database designs should keep the number of indexes to a functional minimum, again due to the high volumes of similar transactions combined with the cost of index maintenance.

xv. Big IOs such as table and range scans due to missing indexes. By definition, OLTP transactions should not require big IOs and should be examined.

xvi. Unused indexes incur the cost of index maintenance for inserts, updates, and deletes without benefiting any users. Unused indexes should be eliminated. Any index that has been used (by select, update or delete operations) will appear in sys.dm_db_index_usage_stats. Thus, any defined index not included in this DMV has not been used since the last re-start of SQL Server.

xvii. Signal waits > 25% of total waits. See sys.dm_os_wait_stats for Signal waits and Total waits. Signal waits measure the time spent in the runnable queue waiting for CPU. High signal waits indicate a CPU bottleneck.

xviii. Plan re-use < 90%. A query plan is used to execute a query. Plan re-use is desirable for OLTP workloads because re-creating the same plan (for similar or identical transactions) is a waste of CPU resources. Compare SQL Server SQL Statistics: batch requests/sec to SQL compilations/sec. Compute plan re-use as follows: Plan re-use = (Batch requests - SQL compilations) / Batch requests. Special exception to the plan re-use rule: zero-cost plans are not cached (not re-used) in SQL 2005 SP2. Applications that use zero-cost plans will have a lower plan re-use, but this is not a performance issue.

xix. Parallel wait type cxpacket > 10% of total waits. Parallelism sacrifices CPU resources for speed of execution. Given the high volumes of OLTP, parallel queries usually reduce OLTP throughput and should be avoided. See sys.dm_os_wait_stats for wait statistics.

xx. Consistently low average page life expectancy. See the Page Life Expectancy counter in the Perfmon object SQL Server Buffer Manager (it represents the average number of seconds a page stays in cache). For OLTP, an average page life expectancy of 300 seconds (5 minutes) is the baseline; anything less could indicate memory pressure, missing indexes, or a cache flush.

xxi. Sudden big drop in page life expectancy. OLTP applications (e.g. small transactions) should have a steady (or slowly increasing) page life expectancy. See Perfmon object SQL Server Buffer Manager. Small OLTP transactions should not require a large memory grant.

xxii. Sudden drops or consistently low SQL cache hit ratio. OLTP applications (e.g. small transactions) should have a high cache hit ratio. Since OLTP transactions are small, there should not be big drops in SQL cache hit rates or consistently low cache hit rates (< 90%). Drops or low cache hit rates may indicate memory pressure or missing indexes.

xxiii. Normally it takes 4-8ms to complete a read when there is no IO pressure. When the IO subsystem is under pressure due to high IO requests, the average time to complete a read increases, showing the effect of disk queues. Periodic higher values for disk seconds/read may be acceptable for many applications. For high performance OLTP applications, sophisticated SAN subsystems provide greater IO scalability and resiliency in handling spikes of IO activity. Sustained high values for disk seconds/read (>15ms) do indicate a disk bottleneck.

xxiv. High average disk seconds per write. The throughput for high volume OLTP applications is dependent on fast sequential transaction log writes. A transaction log write can be as fast as 1ms (or less) for high performance SAN environments. For many applications, a periodic spike in average disk seconds per write is acceptable considering the high cost of sophisticated SAN subsystems. However, sustained high values for average disk seconds/write is a reliable indicator of a disk bottleneck.

xxv. Big IOs such as table and range scans due to missing indexes.

xxvi. Index contention. Look for lock and latch waits in sys.dm_db_index_operational_stats. Compare with lock and latch requests.

xxvii. High average row lock or latch waits. The average row lock or latch waits are computed by dividing lock and latch wait milliseconds (ms) by lock and latch waits. The average lock wait ms computed from sys.dm_db_index_operational_stats represents the average time for each block.

xxviii. Block process report shows long blocks. See sp_configure “blocked process threshold” and Profiler “Blocked process Report” under the Errors and Warnings event.

xxix. High number of deadlocks. See Profiler “Graphical Deadlock” under Locks event to identify the statements involved in the deadlock.

xxx. High network latency coupled with an application that incurs many round trips to the database.

xxxi. Network bandwidth is used up. See counters packets/sec and current bandwidth counters in the network interface object of Performance Monitor. For TCP/IP frames actual bandwidth is computed as packets/sec * 1500 * 8 /1000000 Mbps.

xxxii. Excessive fragmentation is problematic for big IO operations. The dynamic management table-valued function sys.dm_db_index_physical_stats returns the fragmentation percentage in the column avg_fragmentation_in_percent. Fragmentation should not exceed 25%. Reducing index fragmentation can benefit big range scans, which are common in data warehouse and reporting scenarios.

2. If you are developing a database for a decision support system or for analytical processing, follow these general rules and guidelines.

a. Develop data marts based on the database trend analysis requirements.

b. Merge non-key attributes with key attributes using views and tables for optimum retrieval.

c. Use a star or snowflake schema to organize the data within the database.

d. Estimate the sizes of clustered and non-clustered indexes.

3. The following are general guidelines for database optimization.

a. If the database is smaller than 200 MB, define file growth in megabytes; once it grows beyond that, define growth as a percentage.

b. Do not store heavy objects in the database if they are not required in transactions, because they increase the database size and really hurt performance.

c. For really lengthy operations in a stored procedure, use SQL query short-circuiting; this improves query performance by avoiding unnecessary joins and WHERE clauses.

d. Always use a WHERE clause; otherwise performance suffers. Even if there is a "search all" option, do not fetch all rows from the database.

e. Implement paging at the stored procedure level for large amounts of data; doing it on the front end will cause timeouts.

f. Avoid char and varchar fields in comparison operations and joins; integers are always better. Use the ISNULL function for optional parameters to avoid unwanted comparisons in the database.

g. Always list field names rather than using *, because wildcards hurt performance.

h. Use views and stored procedures instead of heavy queries. Do not use cursors, and try to avoid COUNT(*).

i. Since triggers are heavy, prefer constraints where possible; constraints validate data before it is inserted.

j. Table variables are generally preferable to temp tables, but check whether you are inside a transaction and whether that transaction is handled by MSDTC: changes to table variables are not rolled back with the transaction, so they can be left holding invalid data.

k. Avoid HAVING or DISTINCT in your queries, because they require reprocessing of the result set.

l. Prefer to use locking hints with queries; as I mentioned in a previous document, use NOLOCK, ROWLOCK, or UPDLOCK as appropriate.

m. Use SELECT statements with the TOP keyword or the SET ROWCOUNT statement if you need to return only the first n rows, or use the FAST number_rows query hint if you need to return 'number_rows' rows quickly.

n. Prefer UNION ALL over UNION in queries when duplicates do not need to be removed.

o. Do not give SQL Server query optimization hints if you are not sure about them; it is better to leave this part to SQL Server.

p. Don’t use TOP 100 PERCENT in your SQL statements. It does not limit the result to 100 rows (it returns all rows), and when it is used to allow ORDER BY in a view, SQL Server 2005 and later ignore that ordering anyway, so the clause adds nothing but confusion.

q. Try to use the newer SQL Server CROSS APPLY feature rather than cursors.
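The arithmetic behind several OLTP checks in item 1 (signal waits in xvii, plan re-use in xviii, page life expectancy in xx, read latency in xxiii, average lock wait in xxvii, bandwidth in xxxi) can be put into a small sketch like the one below. It assumes you have already sampled the raw numbers yourself, for example wait_time_ms and signal_wait_time_ms from sys.dm_os_wait_stats and the Batch Requests/sec and SQL Compilations/sec counters over the same interval. The function names are hypothetical, and the thresholds are the ones in the checklist, not official limits.

```python
"""Sketch of the OLTP health-check arithmetic from the checklist above."""
from typing import List


def plan_reuse(batch_requests: float, sql_compilations: float) -> float:
    """Plan re-use = (Batch requests - SQL compilations) / Batch requests."""
    if batch_requests <= 0:
        raise ValueError("batch_requests must be positive")
    return (batch_requests - sql_compilations) / batch_requests


def signal_wait_ratio(signal_wait_ms: float, total_wait_ms: float) -> float:
    """Fraction of total wait time spent in the runnable queue waiting for CPU."""
    if total_wait_ms <= 0:
        raise ValueError("total_wait_ms must be positive")
    return signal_wait_ms / total_wait_ms


def average_wait_ms(wait_ms: float, wait_count: int) -> float:
    """Average row lock or latch wait: wait milliseconds / number of waits."""
    return wait_ms / wait_count if wait_count else 0.0


def bandwidth_mbps(packets_per_sec: float, frame_bytes: int = 1500) -> float:
    """packets/sec * 1500 * 8 / 1,000,000, assuming full-size 1500-byte frames."""
    return packets_per_sec * frame_bytes * 8 / 1_000_000


def oltp_warnings(batch_requests: float, sql_compilations: float,
                  signal_wait_ms: float, total_wait_ms: float,
                  page_life_expectancy_s: float,
                  avg_disk_sec_per_read: float) -> List[str]:
    """Return the checklist thresholds that the sampled values break."""
    found = []
    if plan_reuse(batch_requests, sql_compilations) < 0.90:
        found.append("plan re-use below 90%")
    if signal_wait_ratio(signal_wait_ms, total_wait_ms) > 0.25:
        found.append("signal waits above 25% of total waits (CPU pressure)")
    if page_life_expectancy_s < 300:
        found.append("page life expectancy below 300 seconds")
    if avg_disk_sec_per_read > 0.015:  # Perfmon reports Avg. Disk sec/Read in seconds
        found.append("average disk read slower than 15 ms")
    return found


print(plan_reuse(1000, 50))   # 0.95
print(bandwidth_mbps(1000))   # 12.0
print(oltp_warnings(1000, 200, 300, 1000, 250, 0.02))
```

In this sketch each metric is a separate function, so you can reuse them individually when you only have some of the counters.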

Thursday, March 10, 2011

Web UI Considerations

Following are some guidelines, suggestions, and recommendations for designing web applications. Please consider them for site performance and SEO, as they are really helpful. This is the first version of the guidelines; I will try to draft more in the coming days.

· Do your layout with divs

· Please don’t use tables even though they work fine

· Declare the DOCTYPE so the browser knows which standard the page follows

· Test in all major browsers

· Give titles to everything, including links and images

· Don’t use <b>; use <strong> instead. Avoid all other obsolete tags and use HTML 4.0 tags.

· Do not use the GIF format for rich graphics, because it supports only 256 colors

· Design for a resolution of 1024x768, as 56 to 65% of internet users have this resolution

· Use common fonts like serif, sans-serif, and Arial. If you use special fonts that are not installed on the client machine, the site will be displayed in the browser's default font.

· Consider accessibility, because almost 40% of internet users have some kind of disability

· Do not use bgcolor

· Specify the width of li items, as each browser treats them differently

· Use breadcrumbs in navigation so users understand where they are

· Navigation should not be built in JavaScript, because that makes the site complicated for crawlers

· For cleaner CSS, use ASP.NET control adapters so controls render markup that CSS can style properly

· Do not use server-side includes, as they pose many issues for UI design; use master pages instead

· Use user controls only when required, because 1) you don’t know how they will look until they reach the browser, 2) state management is really difficult and they cause more server load than normal, and 3) reuse creates more control flow, which ultimately affects performance.

· When working with master pages, use content placeholders in the header to customize meta elements for each content page

· To transfer data between the master page and content pages, use public properties on the master page, accessed through the @ MasterType directive or the Master property

· If one master page is enough, specify it in web.config instead of on each page

· Use nested master pages only when required

· Utilize Master pages for Header and footer.

· Utilize ASP.Net content pages to design inside pages.

· Use server side asp.net controls for everything, instead of html.

· Create XML Sitemaps.

· Create a custom error page (404). Showing raw errors to users not only frustrates them but also leaves the server vulnerable to the padding oracle attack

· Always use character entities where available, for example for the trademark, registered, and copyright signs.

· Avoid Inline CSS

· All design rules belong in an external CSS file; no inline style tags.

· Do not use non-breaking spaces (&nbsp;) for layout purposes; use CSS instead.

· Use 301 (permanent) and 302 (temporary) redirects appropriately.

· To make controls consistent, use themes instead of native attributes, especially with container controls like Panel, GridView, and data repeaters

· A Theme is applied after the properties set on the page and overrides them, so use a StyleSheetTheme where you want your page settings (including CSS classes) to override the theme

· Make sure your page size and JavaScript code are not heavy; since JavaScript is interpreted, a large page volume will affect page performance.

· Use ViewState only when required, because it is heavy and encoded (optionally encrypted), which causes more data to flow back and forth with the server.

· Encrypt sensitive sections of web.config, such as connection strings, because an attacker may be able to download web.config, as the padding oracle attack demonstrated

· Use regular expressions instead of loops in JavaScript to optimize page performance.

· When a page gets large enough, consider splitting the information across pages. Never try to make a web application behave like a Windows Forms application.

If you don’t want to read all of the guidelines, you can listen to this instead.

Developer SEO Considerations

Every day we come to the office to develop applications, but it is just as important that these applications work well and attract more and more customers. The following is a list of points to consider when developing a web application or website, to make sure it works well with search engines.

· Make sure each new page on the site has its own title text and is referenced through links or site maps. Pages without a reference get lower priority from search engines.

· Use headings instead of bulky images; search engines index heading text, not images.

· In the page content, include a heading (H1) with exactly the same text as the page title, located as close to the top left of the page as possible.

· Make sure headings use heading tags like H1, H2, and H3 rather than font sizes, and make each heading strong for SEO.

· Make sure the page title is meaningful; for example, instead of "page1" it should be "TRxLink-homepage".

· Public site content should be HTML rather than Flash, images, Silverlight, or JavaScript.

· Do not use frames at any cost. No matter what happens, avoid frames.

· The site should not use scripts for redirection. For example, if the website is in a folder abc and a script file on the root redirects to the folder containing the index page, the site will never be indexed by search engines because of the redirection.

· Make sure each page has meta tags with meaningful phrases like "Online Purchasing system". Keep them under 64 words; longer meta tags are treated as spam by search engines, which usually rank the page lower.

· Make sure meta keywords and meta descriptions are relevant to the page. If the page content has nothing to do with its meta description and keywords, search engines will eventually drop its rating because it is treated as SEO spam.

· List meta keywords in order of importance, not arbitrarily. Meta keywords and descriptions should also include singular, plural, and spelling variations of core keywords, for example Purchase order, Purchase Orders, Online Purchase order, Online Purchase Orders, PO, Online PO.

· Make sure the meta description tag is present on each page and properly filled with keywords relevant to the page.

· Give images meaningful file names, not just img1, img2, or imgheader, because some engines compare a page's meta information with its image names.

· Make sure all clickable images have alt attributes that include appropriate keywords.

· Make sure key phrases are sprinkled appropriately throughout the page’s text content.

· Each image on a content page should have height and width attributes.

· Keep menus static rather than loading them from the database; dynamic and exploding menus are often skipped by search engines.

· On long pages, make sure all hyperlinks (<a> tags) use appropriate keyword text.

· Make sure all pages have a header and footer, for consistency and SEO.

· Make sure a site map is available in both HTML and XML formats and that it includes all pages.

· Use title and alt attributes for all important tags, such as images and hyperlinks.

· Make sure the page body has at least 150 words of static descriptive text; otherwise the page may be skipped.

· If you change site content, make sure you have configured 301 redirects from all old pages to the new pages.

· Make sure your analytics code has been appropriately placed on all pages within the new site.

· Check for any broken navigation, internal or external links. I suggest doing this a second time as you launch, just to be certain everything is working as intended.

· Make sure each page has different content; otherwise your page rank will decrease.

· Below is a list of HTML tags and their importance to search engines; try to use the important ones.

| Tag Name | Essential | Somewhat Important | No Influence | Unfavorable |
|---|---|---|---|---|
| `<h1>`...`<h6>` | ● | | | |
| `<title>` | ● | | | |
| `<b>` | ● | | | |
| `<i>` | ● | | | |
| `<img>` | ● | | | |
| `<meta>` | ● | | | |
| `<u>` | ● | | | |
| `<a>` | ● | | | |
| `<body>` | ● | | | |
| `<br>` | ● | | | |
| `<center>` | ● | | | |
| `<font>` | ● | | | |
| `<head>` | ● | | | |
| `<li>` | ● | | | |
| `<ol>` | ● | | | |
| `<p>` | ● | | | |
| `<table>` | ● | | | |
| `<td>` | ● | | | |
| `<tr>` | ● | | | |
| `<ul>` | ● | | | |
| `<frame>` | ● | | | |
| `<frameset>` | ● | | | |
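Several items in this checklist can be checked mechanically before a page goes live. The sketch below uses Python's standard `html.parser` to flag a missing title, an H1 that does not match the title, a missing meta description, images without alt text, frames, and a body shorter than 150 words. `SeoAudit` and `audit` are hypothetical names. The rules are the author's checklist items, not any search engine's published ranking rules, and the word count is a rough whitespace split that skips script and style text.

```python
from html.parser import HTMLParser
from typing import List, Optional


class SeoAudit(HTMLParser):
    """Collects the page elements that several checklist items refer to."""

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.h1 = ""
        self.meta_description: Optional[str] = None
        self.images_missing_alt = 0
        self.uses_frames = False
        self.body_words = 0
        self._capture: Optional[str] = None  # "title" or "h1" while inside one
        self._in_body = False
        self._in_script = False  # script/style text is not page content

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag in ("title", "h1"):
            self._capture = tag
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag == "img" and not attrs.get("alt"):
            self.images_missing_alt += 1
        elif tag in ("frame", "frameset"):
            self.uses_frames = True
        elif tag == "body":
            self._in_body = True
        elif tag in ("script", "style"):
            self._in_script = True

    def handle_endtag(self, tag):
        if tag == self._capture:
            self._capture = None
        elif tag == "body":
            self._in_body = False
        elif tag in ("script", "style"):
            self._in_script = False

    def handle_data(self, data):
        if self._capture == "title":
            self.title += data
        elif self._capture == "h1":
            self.h1 += data
        if self._in_body and not self._in_script:
            self.body_words += len(data.split())


def audit(html: str, min_words: int = 150) -> List[str]:
    """Return the checklist items that the page fails."""
    page = SeoAudit()
    page.feed(html)
    page.close()
    problems = []
    title = page.title.strip()
    if not title:
        problems.append("missing <title>")
    elif page.h1.strip() != title:
        problems.append("<h1> text does not match <title>")
    if not page.meta_description:
        problems.append("missing meta description")
    if page.images_missing_alt:
        problems.append(f"{page.images_missing_alt} image(s) without alt text")
    if page.uses_frames:
        problems.append("page uses frames")
    if page.body_words < min_words:
        problems.append(f"body has fewer than {min_words} words of text")
    return problems


sample = ("<html><head><title>Home</title>"
          "<meta name='description' content='Online purchasing system'></head>"
          "<body><h1>Home</h1><img src='logo.png'>" + " word" * 150 + "</body></html>")
print(audit(sample))  # ['1 image(s) without alt text']
```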

Tuesday, March 1, 2011

HOW TO: Enable the built-in Administrator account in Windows Vista

1. Click Start, and then type cmd in the Start Search box.

2. In the search results list, right-click Command Prompt, and then click Run as Administrator.

3. When you are prompted by User Account Control, click Continue.

4. At the command prompt, type net user administrator /active:yes, and then press ENTER.

5. Type net user administrator

Note: Please replace the

6. Type exit, and then press ENTER.

7. Log off the current user account.

Thursday, February 10, 2011

Email-Enabled Document Libraries in SharePoint

Setting this up is simple, and you can use the best document available on the internet, by www.combined-knowledge.com.

There is only one catch that the document leaves out, and every time I configure this I get stuck on the same issue: you need to configure a virtual SMTP server with the same name as your DNS name. In my case it was project.abcdomain.com, so the SMTP virtual server had to be this named instance; the default instance will not work. I don’t know why, but this is what always happens to me, and I have wasted hours and hours debugging the whole Exchange and SharePoint setup.

Document available here

Tuesday, February 8, 2011

Trailing slash at the end of an aspx page name

After many days of searching for a solution to a long-running problem, I finally found one.

The problem is that when you append a slash to the end of any aspx page URL, it does not generate a 404. Instead, it displays the same page without any CSS or images, because the browser treats the URL as a folder rather than a page, so relative paths break. There is no clear built-in way to resolve this, and the SEO people were, as usual, complaining that their page rating was going down because multiple URLs served the same content. For example:

aboutus.aspx

aboutus.aspx/

and

aboutus.aspx/default.aspx

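are three different URLs that return the same content. I resolved this with the Request.PathInfo property: in Global.asax, if a request has any PathInfo, redirect it to the same URL without the PathInfo. See the following code snippet.

```csharp
public void Application_BeginRequest(object sender, EventArgs e)
{
    // PathInfo is whatever follows the page name, e.g. "/" in aboutus.aspx/
    // or "/default.aspx" in aboutus.aspx/default.aspx.
    if (!String.IsNullOrEmpty(Request.PathInfo))
    {
        // FilePath is the URL path without PathInfo; keep any query string.
        // RedirectPermanent (.NET 4.0+) sends a 301 so search engines drop the duplicate.
        Response.RedirectPermanent(Request.FilePath + Request.Url.Query);
    }

    // Alternative: reject such URLs outright instead of redirecting.
    // throw new HttpException(404, "File not found.");
}
```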

http://msdn.microsoft.com/en-us/library/system.web.httprequest.pathinfo.aspx#Y280
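The redirect depends on cutting the PathInfo off the URL while keeping the query string. Inside Global.asax, Request.FilePath already does the cutting, but the rule can be sketched outside ASP.NET so it can be unit-tested. This is a minimal sketch assuming page URLs end in .aspx; PathInfoStripper is a hypothetical helper name. It separates the query string first, so a "/" inside the query is never mistaken for PathInfo, which is the case a naive cut at the last "/" gets wrong.

```csharp
using System;

// Hypothetical helper, not part of ASP.NET: removes PathInfo (anything after
// ".aspx/") from a raw URL such as "/aboutus.aspx/default.aspx?x=1",
// keeping the query string.
public static class PathInfoStripper
{
    public static string Strip(string rawUrl)
    {
        if (rawUrl == null) throw new ArgumentNullException(nameof(rawUrl));

        // Split off the query string first so a '/' inside it is never
        // mistaken for PathInfo.
        int q = rawUrl.IndexOf('?');
        string path = q >= 0 ? rawUrl.Substring(0, q) : rawUrl;
        string query = q >= 0 ? rawUrl.Substring(q) : string.Empty;

        // PathInfo starts at the first "/" that directly follows ".aspx"
        // (matched case-insensitively).
        const string page = ".aspx";
        int i = path.IndexOf(page + "/", StringComparison.OrdinalIgnoreCase);
        if (i < 0) return rawUrl;   // no PathInfo: leave the URL alone

        return path.Substring(0, i + page.Length) + query;
    }
}
```

For example, Strip("/aboutus.aspx/default.aspx") returns "/aboutus.aspx", and Strip("/aboutus.aspx/?ref=a/b") returns "/aboutus.aspx?ref=a/b".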

Friday, January 21, 2011

Flat File pipeline in BizTalk 2010

Things change day by day, and I am sometimes too lazy to keep up with them. For example, today when I deployed a BizTalk solution I got an error about a missing project resource, something like: "Please verify that the pipeline strong name is correct and that the pipeline assembly is in the GAC."

Upon investigation I realized that, in my case, the BizTalk project assemblies were not being deployed to the GAC. I don't know why, but when this happens you have to add the project assembly to the Global Assembly Cache manually using gacutil -i "Assemblyname.dll". Once you do that, your application will start working. I also learned that there are now two separate GACs: one for .NET Framework 4.0 and one for older .NET applications. So whenever you get an error like this, don't forget to add your project assembly to the GAC.

In .NET Framework 4.0, the GAC went through a few changes: it was split into two, one for each CLR.

To avoid issues between CLR 2.0 and CLR 4.0, each runtime now has its own private GAC. The main change is that CLR 2.0 applications cannot see CLR 4.0 assemblies in the GAC.

This seems to be because the CLR changed in .NET 4.0 but not between 2.0 and 3.5. The same thing happened with the move from CLR 1.1 to CLR 2.0. The GAC can store different versions of an assembly as long as they target the same CLR; the split exists so that old applications do not break.

See the following information in MSDN about the GAC changes in 4.0.

For example, if both .NET 1.1 and .NET 2.0 shared the same GAC, then a .NET 1.1 application, loading an assembly from this shared GAC, could get .NET 2.0 assemblies, thereby breaking the .NET 1.1 application.

The CLR version used for both .NET Framework 2.0 and .NET Framework 3.5 is CLR 2.0. As a result of this, there was no need in the previous two framework releases to split the GAC. The problem of breaking older (in this case, .NET 2.0) applications resurfaces in .NET Framework 4.0, at which point CLR 4.0 was released. Hence, to avoid interference issues between CLR 2.0 and CLR 4.0, the GAC is now split into private GACs for each runtime.

As the CLR is updated in future versions, you can expect the same thing. If only the language changes, the same GAC can be used.

source and http://www.techbubbles.com/net-framework/gac-in-net-framework/
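To check which of the two GACs an assembly belongs in, you can look at the CLR version recorded in it, which Assembly.ImageRuntimeVersion exposes (for example "v2.0.50727" for CLR 2.0 and "v4.0.30319" for CLR 4.0). A minimal sketch, where GacLocator is a hypothetical helper and the folder paths are the standard Windows GAC locations for each runtime:

```csharp
using System;
using System.Reflection;

// Hypothetical helper: maps the CLR version an assembly was built against
// to the GAC folder that runtime uses (paths relative to %windir%).
public static class GacLocator
{
    public static string GacFolderFor(string imageRuntimeVersion)
    {
        if (imageRuntimeVersion == null)
            throw new ArgumentNullException(nameof(imageRuntimeVersion));

        // CLR 2.0 serves .NET 2.0, 3.0 and 3.5: the original GAC.
        if (imageRuntimeVersion.StartsWith("v2.", StringComparison.Ordinal))
            return @"%windir%\assembly";

        // CLR 4.0 serves .NET 4.x (which BizTalk 2010 projects target): the separate GAC.
        if (imageRuntimeVersion.StartsWith("v4.", StringComparison.Ordinal))
            return @"%windir%\Microsoft.NET\assembly";

        return "unknown";   // CLR 1.x or anything else is out of scope here
    }

    // Convenience overload for an assembly that is already loaded.
    public static string GacFolderFor(Assembly assembly)
    {
        if (assembly == null) throw new ArgumentNullException(nameof(assembly));
        return GacFolderFor(assembly.ImageRuntimeVersion);
    }
}
```

If a pipeline assembly maps to the CLR 4.0 folder, install it with the gacutil from the .NET Framework 4.0 tools rather than an older copy.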

Tuesday, January 18, 2011

Diagnosing Routing Failures in BizTalk Server 2006

BizTalk Server 2006 offers some tools that can make it easier to diagnose routing failures in your BizTalk deployments, with the help of the new query facilities in the BizTalk Server 2006 Administration Console. Imagine for example that you've got an incoming message through a receive location that failed routing and was suspended.

The first thing you'll want to do is open up the BizTalk Server 2006 Administration Console, go to the Group Hub page and create a new query. To diagnose a routing failure, you can do two different queries.

Querying for Messages

The first possible query is to query for messages. If a routing failure occurred, you will find the message listed in the results pane in the "Suspended (resumable)" state, as the following screenshot shows:

The first record you see in the screenshot above (the one where the MessageType field is empty) is the actual message that failed routing. If you double click on it, you will see a new dialog window open where you can see the message details, as well as the message context and even the content of each part associated with the message. This is one of my favourite windows in the new UI because it is much more usable than the old HAT windows.

The second record you see in the results pane, however, is far more interesting. This one is actually a Routing Failure Report pseudo-message, which has no actual body, but contains the real state (and message context) of the message at the point the routing failure occurred. You can always recognize an RFR because its Service Class field contains the value "Routing Failure Report".

Since the Routing Failure Report contains the real message context, you can use it to check whether the expected context properties were promoted, and what their values were. So this is the place to look if you suspect the routing problem occurred because your pipeline or adapter didn't promote the right values or the right properties.
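Conceptually, a subscription is a set of conditions on promoted context properties, and a published message that matches no subscription is what produces the routing failure. The following toy model illustrates that idea under simplifying assumptions; it is not the BizTalk API (real subscriptions also support OR groups and operators other than equality). Subscription and MessageBoxModel are hypothetical names, while BTS.MessageType is a real BizTalk context property used here only as a sample key.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Toy model of message-box routing, NOT the BizTalk API: a subscription
// matches when every property it requires was promoted with the expected value.
public sealed class Subscription
{
    public string Name { get; }
    private readonly Dictionary<string, string> required;

    public Subscription(string name, IDictionary<string, string> requiredProperties)
    {
        Name = name ?? throw new ArgumentNullException(nameof(name));
        required = new Dictionary<string, string>(requiredProperties);
    }

    // A property that was never promoted cannot satisfy a condition, which is
    // why a missing promotion leads to a routing failure.
    public bool Matches(IDictionary<string, string> promoted) =>
        required.All(r => promoted.TryGetValue(r.Key, out var value) && value == r.Value);
}

public static class MessageBoxModel
{
    // Returns the names of all matching subscribers. An empty list models the
    // "no subscribers were found" routing failure.
    public static List<string> Route(IDictionary<string, string> promoted,
                                     IEnumerable<Subscription> subscriptions) =>
        subscriptions.Where(s => s.Matches(promoted)).Select(s => s.Name).ToList();
}
```

For instance, if a receive pipeline such as PassThruReceive does not promote BTS.MessageType, a send port filtering on BTS.MessageType never matches and Route returns an empty list: the same situation the Routing Failure Report lets you inspect.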

Querying for services

You can also start by querying for suspended service instances (message receives/sends are also service instances):

The Messaging Service Instance is the actual instance that was suspended. By opening up the Service Details window, you can look at the exact error message that was logged in the Error Information tab:

The published message could not be routed because no subscribers were found. This error occurs if the subscribing orchestration or send port has not been enlisted, or if some of the message properties necessary for subscription evaluation have not been promoted. Please use the Biztalk Administration console to troubleshoot this failure.

You can also see the list of messages associated with this service instance (in this case only one) in the Messages tab of the window.

By analogy, the Routing Failure Report instance is a service instance associated with the routing failure report; from here you can get access to the Routing Failure Report message as well.

Troubleshooting the routing failure

One really interesting option here is that you can right-click on either of the suspended service instances in the Query Results pane and you'll see a new "Troubleshoot Routing Failure" submenu with some options to help you diagnose why the routing failure happened:

- The Find failed service instance option will run a new query that will find you the specific service instance that failed. It is not as useful in the above example because we already knew exactly which service instance it was (it had already been returned in the original query), but in cases where you have hundreds of service instances, this can make it a lot easier to find the relevant instance.

- The Show all subscriptions option will open a new query window that queries for all subscriptions defined in the message box.

- The Show all active subscriptions option is the same as above, but it filters the results so that only subscriptions in an Active state are returned.

- The How to troubleshoot routing failures option will open up the help topic on troubleshooting routing failures in the BizTalk help.

As you can see, BizTalk Server 2006 makes it much easier to see what’s going on in your environment and offers a few new options integrated into the management console itself, instead of having to go through HAT to view all this stuff. Also, the new UI is great, far more usable than what HAT provided (though you still need to go to HAT for some scenarios, including orchestration debugging), and gives you a much more integrated view of how subscriptions, service instances and messages relate to each other.

http://winterdom.com/2006/03/diagnosingroutingfailuresinbiztalkserver2006

Tuesday, January 11, 2011

How to Remove Watermark (Build Info) from Desktop in Windows Vista, 7 and Server 2008 (Both 32-bit and 64-bit) Including All Beta Builds and Service Packs

When we install a Beta or RC build of Windows Vista, 7 or Server 2008, or a Beta or RC version of a service pack (e.g. SP1, SP2), a watermark is shown on the desktop.

"Evaluation Copy", "For testing purpose only", "Test Mode", "Safe Mode" or similar text is shown in the watermark on Desktop.

It looks ugly when you use dark wallpapers and becomes irritating sometimes. If you also don't like this watermark and want to get rid of it, here are 2 great tools to remove this watermark:

- Universal Watermark Remover

- Remove Watermark

Universal Watermark Remover

"Universal Watermark Remover" is an excellent small and portable utility created by "Orbit30" which can remove the ugly watermark from Windows Vista, 7 and Server 2008 Desktop. It works for both 32 and 64-bit versions of Windows.

Just download it using the above link, run the EXE file and follow the instructions. You'll need to restart your system for the change to take effect.

Remove Watermark

"Remove Watermark" is another portable tool, created by "deepxw", which can remove the watermark from the Windows Vista, 7 and Server 2008 desktop. It works for both 32-bit and 64-bit versions, and even for all languages and service packs.

Download the ZIP file, extract it and run the correct EXE file for your Windows version. It will ask for confirmation; press Y to confirm, and it will patch the system file to remove the watermark.

64-bit users will also need to rebuild the MUI cache: after patching the file, run the tool again and press R to rebuild the MUI cache.

PS: You can also manually remove the watermark or customize watermark using following tutorial:

Show Your Desired Text on Desktop by Customizing Windows Build Number